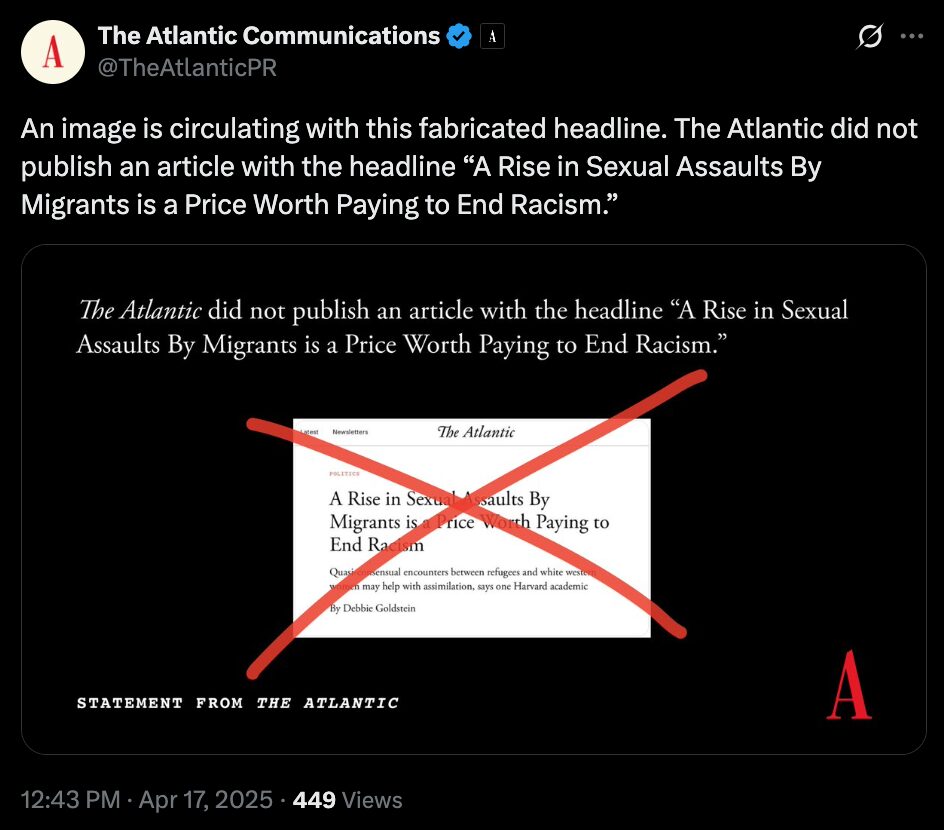

Misinformation, Automation, and Dehumanization

The term “bot” has become a catchall insult in modern political discourse, used to discredit online accounts as inauthentic or manipulative. But the reality of political botnets is far more complex—and their impact on our perception of public opinion is far more insidious. To truly understand botnets, we must explore their origins, dissect their modern equivalents, and examine how they manipulate information to shape our beliefs and behaviors.

What is a Botnet? Origins in Email Bombing Campaigns

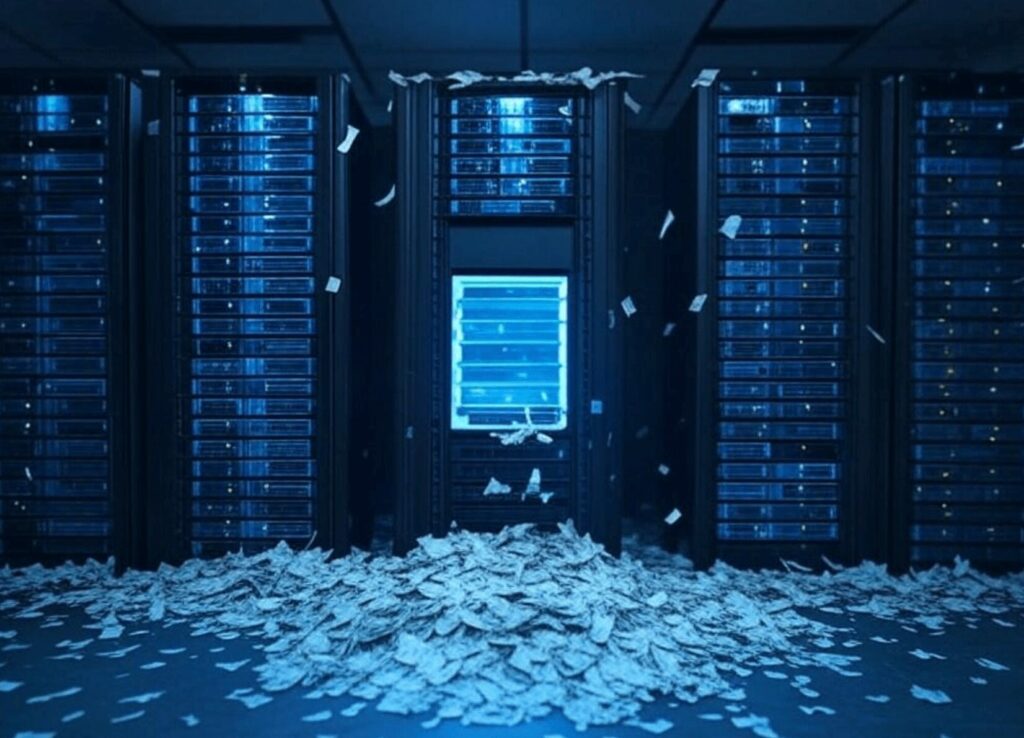

A botnet originally referred to a network of computers infected with malicious software and controlled remotely by a single operator. Some of the earliest botnets were used for email bombing: overwhelming targets with spam or malicious emails as a form of harassment or disruption.

Today, botnets have evolved into sophisticated tools of misinformation. They amplify messages, suppress dissent, and create the illusion of public consensus on social media. However, not all bot activity relies solely on automation; real people often run many so-called ‘bots,’ combining human strategy with automation to achieve their goals.

The Modern “Bot” on Social Media: Automation, Humans, and Everything in Between

The modern botnet is no longer just a network of automated accounts. It’s a spectrum of behaviors and tactics that blur the line between human and machine:

- True Botnets (Automated Networks):

Fully automated systems that use scripts or APIs to mass-publish content. These botnets clog social media feeds with repetitive posts, artificially inflating trends and making content appear “popular” or “organic” to viewers.

- Semi-Automated Accounts:

These accounts combine automation with human oversight. For example, operators may schedule posts through software while interacting with users manually to appear authentic.

- Sock Puppets or Burner Accounts:

Real people operating fake accounts to spread narratives or join discussions under false identities. Public figures, journalists, and political operatives sometimes use them to test ideas or spread misinformation.

- CIB Networks (Coordinated Inauthentic Behavior):

A term coined by Facebook, CIB refers to groups of accounts (human-operated, automated, or both) that coordinate to manipulate public discourse. These networks create the illusion of widespread support for, or opposition to, a topic or individual.

- Ghost-Operated Public Accounts:

Public figures or organizations whose social media accounts are run by employees or contractors. While not bots, these accounts often mislead followers into believing they are engaging directly with the individual in question.

How Botnets Clog Information and Manipulate Perception

One of the most dangerous effects of modern botnets is their ability to clog information channels. By flooding social media with repetitive, low-quality, or incendiary content, botnets create the illusion of mass engagement. This tactic misleads users in several ways:

- False Legitimacy Through Trending Content:

Social media platforms highlight trending or highly viewed content, often using algorithms that don’t distinguish between organic engagement and manipulation. Botnets exploit this, making nonsense or propaganda appear popular and legitimate.

- Manufactured Public Opinion:

When users see a hashtag trending or a narrative going viral, they often assume it reflects genuine public sentiment. In reality, that “popularity” may be the product of paid bot activity designed to distort the perception of public opinion.

- Information Overload:

By flooding feeds with noise, botnets drown out authentic voices and distract users from substantive discussions. The result is a chaotic environment in which it is difficult to tell truth from fabrication.

Foreign Influence and the Reality of Manipulation

It would be naive to ignore the fact that foreign governments, including those of Russia, North Korea, and China, use similar techniques to influence American discourse. These countries recognize that botnets and misinformation campaigns can be effective both at countering opposition to their preferred narratives and at helping divisive ideas take root in American society.

For example, foreign operators may amplify polarizing topics to create confusion and division, weakening public trust in institutions or fostering hostility between ideological groups. However, this reality is often misrepresented in the U.S. by lawmakers and media:

- Exaggerated Fears About TikTok:

Critics frequently accuse platforms like TikTok of serving as tools for Chinese spying, but they often fail to state these accusations clearly or back them with substantive evidence. There is no public evidence that TikTok uses ‘backdoors’ to access users’ phones, and the notion of the Chinese government surveilling Americans en masse through dance videos and viral trends remains far-fetched.

Ironically, American corporations and CIB networks are far more likely to manipulate TikTok users than the Chinese government is. Foreign influence exists, but it does not appear to be as pervasive or sinister as it is often portrayed.

- Hypocrisy in U.S. Narratives:

The U.S. government itself engages in similar tactics, using social media to amplify its own narratives and suppress dissent. Meanwhile, outrage over foreign interference often serves as a convenient distraction from the fact that domestic entities, such as political parties, corporations, and advocacy groups, are the biggest players in manipulating public opinion on social media.

Foreign nations do engage in these practices, but Americans conduct the majority of botnet activity targeting their fellow citizens. Political campaigns, corporate advertisers, and coordinated smear campaigns often drive this activity.

Who Funds Botnets? Follow the Money

Botnet campaigns do not arise on their own; someone pays for them. Understanding who funds these operations is key to recognizing their purpose:

- Funded by Apparent Targets:

In some cases, botnets are paid to attack the very people funding them as a distraction strategy. This self-smear tactic shifts attention away from genuine controversies or criticism by creating controlled, performative outrage. For example, a political figure may fund a botnet to attack their own campaign, casting themselves as a victim while diverting attention from more damaging issues.

- Funded by Beneficiaries of the Attack:

More commonly, botnets are funded by individuals or organizations who stand to gain from the chaos. These can include corporations, political operatives, or advocacy groups seeking to suppress dissent, amplify their message, or destabilize their opponents.

Reframing the Conversation: Beyond “Bots”

The term “bot” has become a shorthand insult, but it oversimplifies the reality of online manipulation and dehumanizes the real people behind many of these accounts. Not every suspicious account is an automated program, and not every harmful narrative is spread by machines. To better understand the landscape, let’s name the types of activity we encounter more precisely:

- True Botnets: Fully automated networks mass-publishing content.

- Amplifiers: Semi-automated accounts designed to boost messages or trends.

- Sock Puppets: Fake accounts operated by real people to spread narratives under false identities.

- Ghost Operators: Accounts attributed to public figures but run by teams.

- CIB Networks: Coordinated groups of accounts—human or automated—designed to manipulate discourse.

Think Critically About Online Narratives

Understanding the mechanics of botnets is only half the battle. To resist their influence, we must consistently approach online content with a critical eye:

- Question Trends:

Just because a hashtag is trending doesn’t mean it reflects organic public opinion. Look for signs of coordinated activity, such as repetitive posts or sudden surges in engagement.

- Verify Sources:

Anonymous accounts should always be approached with skepticism. Consider the motivations behind the narratives they promote.

- Don’t React Emotionally:

Botnets thrive on emotional reactions. Take a step back, analyze the content critically, and seek out diverse perspectives.

Resist the Manipulation

Political botnets and CIB networks, whether foreign or domestic, are tools of deception designed to distort public opinion and undermine trust. By flooding information channels, they create chaos, distract from meaningful issues, and make it harder for people to think critically.

The next time you encounter a suspicious trend or narrative, remember: not everything you see online is as it seems. Stay skeptical, stay informed, and resist the manipulation.